LoRAs, or Low-Rank Adaptations, have become a standard way to steer diffusion models toward specific styles and subjects. By adding small trained updates to selected weight matrices of a diffusion model, most commonly its attention and cross-attention layers, LoRAs let creators tailor outputs to particular artistic or technical needs without retraining the whole model. In this article, we’ll explore how LoRAs work, where they are useful, and how to integrate them into ComfyUI workflows step by step.

What Are LoRAs?

LoRAs (Low-Rank Adaptations) are small add-on models that steer a diffusion model toward specific outcomes, such as reproducing a particular style or subject. Instead of storing a full copy of each updated weight matrix, a LoRA stores the change to that matrix as the product of two much smaller, low-rank matrices. Because only these small matrices are trained and saved, LoRAs need far less storage and compute than fine-tuning the entire model, which makes them an efficient and scalable way to adapt diffusion models.
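To make the low-rank idea concrete, here is a minimal NumPy sketch of one LoRA-adapted linear layer. The layer sizes, the rank of 4, and the alpha/rank scaling are illustrative assumptions, not values from any particular LoRA file; the point is that the trained update B @ A has rank at most 4 and can either be applied alongside the frozen weight or merged into it.

```python
import numpy as np

def lora_forward(x, W, A, B, scale):
    """Frozen base projection W @ x plus the scaled low-rank correction B @ (A @ x)."""
    return W @ x + scale * (B @ (A @ x))

# Illustrative (assumed) sizes; real SDXL attention projections are larger.
rng = np.random.default_rng(0)
d_out, d_in, rank, alpha = 64, 32, 4, 4.0
scale = alpha / rank                            # common alpha/rank scaling convention

W = rng.standard_normal((d_out, d_in))          # frozen pretrained weight
A = rng.standard_normal((rank, d_in)) * 0.01    # trainable "down" matrix (rank x d_in)
B = np.zeros((d_out, rank))                     # trainable "up" matrix, starts at zero
x = rng.standard_normal(d_in)

# At initialization B is zero, so the adapted layer matches the base layer exactly.
assert np.allclose(lora_forward(x, W, A, B, scale), W @ x)

# Random stand-in for trained values: the update B @ A has rank at most `rank`.
B = rng.standard_normal((d_out, rank))
delta_W = scale * (B @ A)
merged = W + delta_W                            # can be folded into W once for inference
assert np.allclose(lora_forward(x, W, A, B, scale), merged @ x)
```

Because B starts at zero, a freshly initialized LoRA leaves the model's behavior unchanged; training moves only A and B, never W.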

Key Characteristics of LoRAs:

- Data Efficiency: LoRAs store far less data than full diffusion models because they contain only the small low-rank matrices needed for the adaptation.

- Cross-Attention Layer Modification: LoRAs typically target the attention layers of the diffusion model, including the cross-attention layers where the text prompt conditions the image, rather than rewriting every weight.

- Flexibility: LoRAs can be trained for many purposes, such as artistic styles, specific subjects, or technical image-generation tasks.
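A quick parameter count shows where the data efficiency comes from. The layer shape below (a 2048-dimensional text embedding projected into a 1280-dimensional attention layer) and the rank of 16 are assumptions chosen to resemble an SDXL cross-attention projection; real LoRAs vary in rank and in which layers they cover.

```python
def full_param_count(d_in: int, d_out: int) -> int:
    """Parameters in the dense weight matrix that the LoRA adapts."""
    return d_in * d_out

def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Parameters stored by one LoRA pair: A is (rank x d_in), B is (d_out x rank)."""
    return rank * d_in + d_out * rank

# Assumed, illustrative layer shape and rank.
d_in, d_out, rank = 2048, 1280, 16
full = full_param_count(d_in, d_out)
lora = lora_param_count(d_in, d_out, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# prints: full: 2,621,440  lora: 53,248  ratio: 2.03%
```

The LoRA cost grows with d_in + d_out rather than d_in × d_out, which is why the savings increase as layers get wider.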

Practical Example: Using LoRAs in ComfyUI

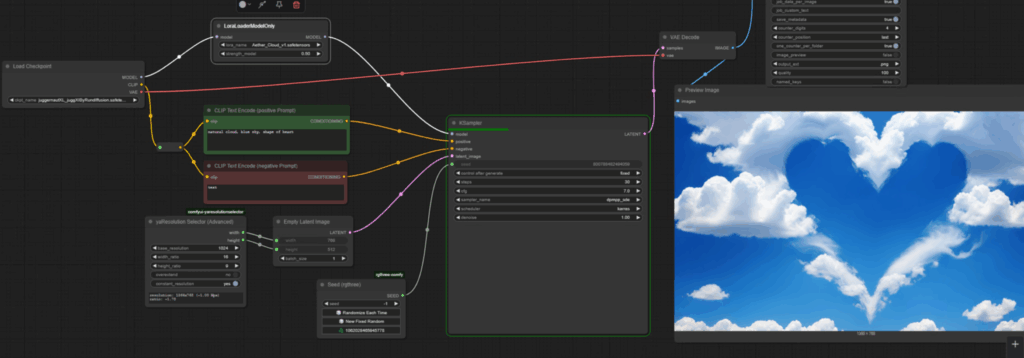

To see LoRAs in action, let’s walk through a practical example using the Aether Cloud LoRA, which is designed for SDXL models.

Step 1: Setting Up the Workflow

In ComfyUI, start by creating a basic text-to-image workflow using the Juggernaut XL checkpoint. This model already produces high-quality clouds, but adding a LoRA lets you push the output toward a specific style.

- Load the LoRa:

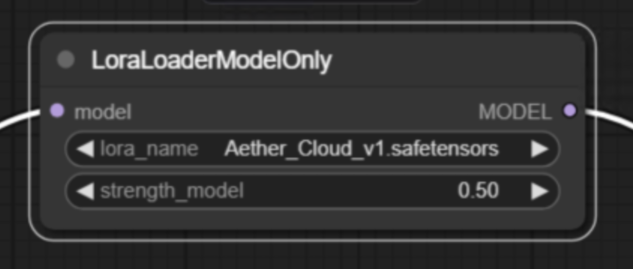

- Use the LoraLoaderModelOnly node to load the LoRa model.

- Connect this node between the load checkpoint node and the KSampler node.

- Adjust the Model Strength:

- The model strength parameter controls how much influence the LoRa has on the output.

- Start with a default strength of 1 and adjust as needed.
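If you drive ComfyUI through its HTTP API instead of the graph editor, the same wiring can be written in the API (prompt) format, where each link is a [node_id, output_index] pair. This is a sketch under assumptions: the checkpoint and LoRA file names, the prompt text, and the sampler settings are hypothetical placeholders, and queue_prompt assumes a ComfyUI server running at its default local address.

```python
import json
import urllib.request

# Hypothetical file names; use whatever is in your models/checkpoints and models/loras folders.
CHECKPOINT = "juggernautXL.safetensors"
LORA = "aether_cloud_sdxl.safetensors"

def build_workflow(lora_strength: float = 1.0, steps: int = 20, seed: int = 42) -> dict:
    """Text-to-image graph with the LoRA loader between the checkpoint and the KSampler."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": CHECKPOINT}},          # outputs: MODEL 0, CLIP 1, VAE 2
        "2": {"class_type": "LoraLoaderModelOnly",
              "inputs": {"model": ["1", 0], "lora_name": LORA,
                         "strength_model": lora_strength}},  # output: patched MODEL 0
        "3": {"class_type": "CLIPTextEncode",                # placeholder positive prompt
              "inputs": {"clip": ["1", 1], "text": "dramatic clouds at sunset"}},
        "4": {"class_type": "CLIPTextEncode",                # placeholder negative prompt
              "inputs": {"clip": ["1", 1], "text": "blurry, low quality"}},
        "5": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
        "6": {"class_type": "KSampler",
              "inputs": {"model": ["2", 0],                  # model comes from the LoRA loader
                         "positive": ["3", 0], "negative": ["4", 0],
                         "latent_image": ["5", 0], "seed": seed, "steps": steps,
                         "cfg": 7.0, "sampler_name": "euler", "scheduler": "normal",
                         "denoise": 1.0}},
        "7": {"class_type": "VAEDecode",
              "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
        "8": {"class_type": "SaveImage",
              "inputs": {"images": ["7", 0], "filename_prefix": "clouds"}},
    }

def queue_prompt(workflow: dict, server: str = "http://127.0.0.1:8188") -> dict:
    """POST the graph to a running ComfyUI server's /prompt endpoint (address assumed)."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    req = urllib.request.Request(f"{server}/prompt", data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

With a server running, queue_prompt(build_workflow(lora_strength=1.0)) queues one run. Compared with a plain text-to-image graph, the only rewired edge is the KSampler's model input, which now comes from node "2" instead of node "1".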

Step 2: Comparing Outputs

With the LoRA loaded, queue the workflow and compare the results:

- Without LoRA: The clouds generated by Juggernaut XL are realistic but lack artistic flair.

- With LoRA (Strength 1): The output becomes more abstract, reflecting the distinctive character of the Aether Cloud LoRA.

To find a balance between realism and abstraction, reduce the strength to 0.5 and re-run the workflow. This blends the intrinsic properties of Juggernaut XL with the artistic style of the LoRA.
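Why does 0.5 land between the two looks? In a sketch of how LoRA loaders commonly patch weights (an assumption about the general mechanism, not a description of any one loader's code), the strength value scales the LoRA's weight update linearly. The matrices below are random stand-ins.

```python
import numpy as np

def apply_lora(W, delta_W, strength):
    """Patched weight: the LoRA update is scaled linearly by the strength value."""
    return W + strength * delta_W

rng = np.random.default_rng(1)
W = rng.standard_normal((8, 8))                                      # stand-in base weight
delta_W = rng.standard_normal((8, 2)) @ rng.standard_normal((2, 8))  # stand-in rank-2 update

base_only = apply_lora(W, delta_W, 0.0)   # strength 0: the base model, unchanged
half = apply_lora(W, delta_W, 0.5)
full = apply_lora(W, delta_W, 1.0)

assert np.allclose(base_only, W)
assert np.allclose(half, (W + full) / 2)  # 0.5 sits exactly halfway, weight by weight
```

The interpolation is linear only in weight space; because the network itself is nonlinear, the generated image does not change in exact proportion to the strength, which is why it is worth checking a few values by eye.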

Step 3: Increasing Detail

For more detail, increase the number of inference steps in the KSampler node:

- 20 Steps: Produces decent results but lacks fine details.

- 30 Steps: Adds convincing details and enhances the overall quality.
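When comparing step counts, it helps to change only that one setting. This hypothetical helper works on an API-format workflow dictionary (the node IDs and the stripped-down graph are placeholders) and returns patched copies, leaving the seed and every other input untouched so the comparison stays fair.

```python
import copy

def with_steps(workflow: dict, steps: int) -> dict:
    """Return a copy of an API-format workflow with every KSampler's steps replaced."""
    patched = copy.deepcopy(workflow)  # never mutate the caller's graph
    for node in patched.values():
        if node.get("class_type") == "KSampler":
            node["inputs"]["steps"] = steps
    return patched

# Minimal stand-in graph (hypothetical IDs); only the KSampler node matters here.
base = {
    "6": {"class_type": "KSampler", "inputs": {"steps": 20, "seed": 42, "denoise": 1.0}},
    "7": {"class_type": "VAEDecode", "inputs": {"samples": ["6", 0]}},
}
variants = {n: with_steps(base, n) for n in (20, 30)}
```

Each variant differs from the original only in its step count, so any extra detail in the 30-step image can be attributed to the additional sampling steps.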

Advanced Techniques: Inpainting with LoRAs

LoRAs can also be used for inpainting, allowing you to modify specific areas of an image while preserving the overall composition.
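Conceptually, inpainting preserves the composition because only the masked region is replaced. The NumPy sketch below shows that final compositing step on tiny stand-in images. It is a simplification: real inpainting nodes also work in latent space and may feather the mask edges, which produces a soft blend rather than a hard switch.

```python
import numpy as np

def composite(original, generated, mask):
    """Keep original pixels where mask == 0 and generated pixels where mask == 1.

    original, generated: float arrays of shape (H, W, C) in [0, 1]
    mask: float array of shape (H, W); values between 0 and 1 blend the two
    """
    m = mask[..., None]                   # broadcast the mask over color channels
    return m * generated + (1.0 - m) * original

H, W = 4, 4
original = np.zeros((H, W, 3))            # stand-in for the original image
generated = np.ones((H, W, 3))            # stand-in for the newly generated content
mask = np.zeros((H, W))
mask[1:3, 1:3] = 1.0                      # 2x2 region to repaint
out = composite(original, generated, mask)
```

Every pixel outside the mask comes straight from the original, which is what keeps the surrounding sky and composition intact.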

Example: Creating a Heart-Shaped Cloud

- Set Up the Inpainting Workflow:

  - Use the CropAndStitch nodes and draw a mask over the area to be modified.

  - Set the denoise strength below 1 (e.g., 0.8) so that the underlying image still provides a rough shape for the new generation.

- Modify the Prompt:

  - Use a specific prompt such as “two birds kiss each other in the sky.”

- Run the Workflow:

  - Queue the workflow and compare the inpainted result with the original image.
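The denoise value decides how much of the original image survives. One common convention, used by the img2img pipelines in Hugging Face diffusers, skips the noisiest part of the schedule in proportion to 1 - denoise. ComfyUI's KSampler may handle the schedule differently in detail, so treat this as a model of the idea rather than of the node; the function name is hypothetical.

```python
def img2img_schedule(num_steps: int, denoise: float) -> tuple[int, int]:
    """Return (first_step, steps_run) for a given denoise strength.

    denoise = 1.0 starts from pure noise; lower values skip the noisiest
    steps, so the original image still shapes the result.
    """
    steps_run = min(int(num_steps * denoise), num_steps)
    first_step = num_steps - steps_run
    return first_step, steps_run

print(img2img_schedule(30, 0.8))  # (6, 24)
```

With 30 steps at denoise 0.8, sampling starts 6 steps into the schedule, so the masked area begins from a partly noised version of the original cloud instead of pure noise.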

This technique gives you precise control over a chosen region, combining the LoRA’s style with the base model’s output while leaving the rest of the image unchanged.

Best Practices for Using LoRAs

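- Experiment with Strength Parameters: Adjust the model strength to balance the original model’s properties with the LoRA’s influence.

- Optimize Inference Steps: More steps often lead to better detail but increase processing time.

- Combine with the CLIP Text Encoder: Some LoRAs also contain weights for the CLIP text encoder. For these, use the LoraLoader node instead of LoraLoaderModelOnly; it patches both the model and CLIP (with separate strength_model and strength_clip values), and its CLIP output should feed your CLIP Text Encode nodes.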
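For LoRAs that also patch the text encoder, ComfyUI's built-in LoraLoader node takes both MODEL and CLIP inputs and returns patched versions of each. The fragment below sketches that wiring in API format; the function name, file names, and prompt are hypothetical, and the remaining nodes (sampler, decoder, saver) would be connected as in a normal workflow.

```python
def lora_with_clip_nodes(ckpt_name: str, lora_name: str,
                         strength_model: float = 1.0, strength_clip: float = 1.0) -> dict:
    """API-format fragment: checkpoint -> LoraLoader -> text encoder (hypothetical helper)."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": ckpt_name}},             # outputs: MODEL 0, CLIP 1, VAE 2
        "2": {"class_type": "LoraLoader",
              "inputs": {"model": ["1", 0], "clip": ["1", 1],
                         "lora_name": lora_name,
                         "strength_model": strength_model,
                         "strength_clip": strength_clip}},     # outputs: MODEL 0, CLIP 1
        # The prompt is encoded with the LoRA-patched CLIP, not the checkpoint's raw CLIP.
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"clip": ["2", 1], "text": "placeholder prompt"}},
    }

nodes = lora_with_clip_nodes("base_model.safetensors", "style_lora.safetensors",
                             strength_clip=0.8)
```

A KSampler in the full graph would then take its model from ["2", 0], so both the image model and the text encoder see the LoRA.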
